My current project is for a company called HDMP (the website is in Dutch / French). We make software for medical practitioners. I am working on a service that communicates with web services created by the Belgian government. The services are quite old, as shown by the fact that they expect a flat file with specific record formats as input, the so-called eFact format. I am parsing this file using the excellent FileHelpers package, which allows describing fixed-length file formats. This article is only about the code generation, not about the use of the FileHelpers library.

In the eFact file there are over 10 different record formats, all with a fixed length of 370 bytes. Fields in a record are positional. To describe this I created a more readable Domain Specific Language in F#. In this article I will demonstrate how this works. The code for this article can be found on GitHub at GVerelst/CodeGen: F# DSL for C# code generation.

Prerequisites

- You’ll need a little bit of C# knowledge to follow this post. In particular, I will show how to create a couple of C# classes, with some (custom) attributes. But in the end the F# program will just generate some text that happens to be C# code.

- F# knowledge will help, but I will explain most of what I’m doing in this post.

The Problem

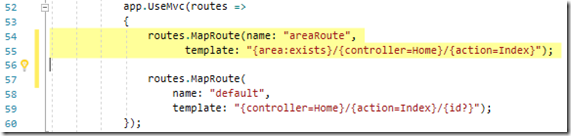

The code that is needed for FileHelpers to work with fixed records looks like this:

// //////////////////////////////////////

// FileInfoBase

// //////////////////////////////////////

[FixedLengthRecord()]

public partial class FileInfoBase

{

// Segment segment200

[EFactMetadata("200", "6N", "1-6", "Naam van het bericht", "Nom du message"), FieldFixedLength(6), FieldAlign(AlignMode.Right, '0')] public int MessageName { get; set; } = 920000; // 920000|920900|...

[EFactMetadata("2001", "2N", "7-8", "Code fout", "Code érreur"), FieldFixedLength(2), FieldAlign(AlignMode.Right, '0')] public byte Error2001 { get; set; } = 0;

[EFactMetadata("201", "2N", "9-10", "Versienummer formaat van het bericht", "N° version du format du message"), FieldFixedLength(2), FieldAlign(AlignMode.Right, '0')] public byte MessageVersionNumber { get; set; } // 2

// ...

[EFactMetadata("204", "14N", "21-34", "Referentie bericht ziekenhuis", "Reference du message"), FieldFixedLength(14), FieldTrim(TrimMode.Both)] public string InputReference { get; set; } = new string('0', 14);

// ...

[EFactMetadata("3091", "2N", "206-207", "Code fout", "Code érreur"), FieldFixedLength(2), FieldAlign(AlignMode.Right, '0')] public byte Error3091 { get; set; } = 0;

}

We describe the record fields mainly using attributes:

- The FileHelpers attributes that describe the format of the field.

- The EFactMetadata custom attribute that gives some additional information about the field. It contains the name of the zone (ex: “200”. Remember, this is an archaic record format), the type of the zone (ex: “6N” or “45A”), the position in the record (calculated), and then the translation in Dutch and French.

- In addition we also give the field a data type, a name, an optional default value and an optional comment.

The documentation describes the file format using these terms. I also created a file viewer to show the contents in a user-friendly way, hence the translated fields. This will not be on GitHub. The attributes allow the use of reflection in the user interface to show the file format. The documentation also uses a notion of segments to describe a block in the record formats that can be reused in similar record formats. We want to mimic this behavior as well.

Clearly this is a lot of error-prone code to type, and it is not very readable because of all the clutter. If only we could represent this in a more concise and readable way, and generate the necessary code from this …

Defining the internal Domain Specific Language in F#

Looking at this, we can see some needed entities. The first thing we need to describe is a zone (which will translate into a property in the generated class). For the first zone (“200”) this can look like

Z "200" (N 6) "Dutch name" "French name" Int "PropertyName" "920000" "920000|920900|..."

This contains all the data we need to describe a field with all its attributes. Let’s create the Zone type:

type Zone = { zone: string; length: Length; nl: string; fr: string; datatype: Datatypes; name: string; defaultvalue: string; comments: string}

and a constructor for this type:

let Z zone length nl fr dt name dft comments =

    { zone=zone; length = length; nl= nl; fr = fr; datatype=dt; name=name; defaultvalue=dft; comments=comments }

- zone: the zone name (being “200”, “2001”, …)

- length: definition of the length (ex N 6 indicates 6 digits, A 5 indicates 5 characters)

- nl: description in Dutch

- fr: description in French

- The rest of the fields are clear.

This is valid F# code, embedded in our project. The nice thing is that the compiler will prevent a lot of errors for us. This is what is called an “internal DSL”. An external DSL describes a separate language, with its own syntax rules. This means that the interpretation of the external DSL needs to be written as well.

There are some unknown parts in there:

Z "200" (N 6) "Naam van het bericht" "Nom du message" Int "PropertyName" "920000" "920000|920900|..."

The datatype is Int, so we must describe this as well. This could have been just a string, but that doesn’t allow for validation. Ideally we want the F# compiler to catch as many errors as possible before we start to generate the code. So here is the Datatypes type, a discriminated union:

type Datatypes = Bool | CRC | Byte | Short | Int | DateTime | Time | String | Money | AmbHos | Gender | Error

Now the F# compiler will only allow these datatypes. Depending on the datatype we can generate slightly different C# code. Example:

Int generates this:

[EFactMetadata("200", "6N", "1-6", "Naam van het bericht", "Nom du message"), FieldFixedLength(6), FieldAlign(AlignMode.Right, '0')] public int MessageName { get; set; } = 920000; // 920000|920900|...

And String will generate this:

[EFactMetadata("204", "14N", "21-34", "Referentie bericht ziekenhuis", "Reference du message"), FieldFixedLength(14), FieldTrim(TrimMode.Both)] public string InputReference { get; set; } = new string('0', 14);

Of course the other datatypes generate their own versions.

Having this in place already reduces the number of hard to find errors in the C# code.

Z "200" (N 6) "Naam van het bericht" "Nom du message" Int "PropertyName" "920000" "920000|920900|..."

We also see a Length. The constructor (N 6) is composed of the length type (“A” is alphabetic, “N” is numeric, “S” is numeric but prefixed with ‘+’ or ‘-’) and the number of positions. This will later be used in the code generation. Let’s describe this:

type LengthType = A | N | S

type Length = { ltype: LengthType; length: int }

and we create 3 constructor functions:

let N x = { ltype= N; length= x }

let A x = { ltype= A; length= x }

let S x = { ltype= S; length= x }

N 6 will now return a new Length record with ltype = N, length = 6. Having these 3 little functions again allows the F# compiler to validate the code at compile time.
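To see the compile-time checking in isolation, here is a minimal, self-contained sketch of the zone vocabulary that can be pasted into F# Interactive. It is not the project's actual source: the Datatypes list is shortened, and the RequireQualifiedAccess attribute is an assumption added so that the constructor functions N, A and S cannot be mistaken for the union cases of the same name.

```fsharp
// Sketch only. RequireQualifiedAccess is an assumption of this example:
// it forces the cases to be written as LengthType.N, which leaves the
// short names N, A and S free for the constructor functions.
[<RequireQualifiedAccess>]
type LengthType = A | N | S

type Length = { ltype: LengthType; length: int }

// Shortened list of datatypes, enough for the example.
type Datatypes = Byte | Int | String

type Zone =
    { zone: string; length: Length; nl: string; fr: string
      datatype: Datatypes; name: string; defaultvalue: string; comments: string }

let N x = { ltype = LengthType.N; length = x }
let A x = { ltype = LengthType.A; length = x }
let S x = { ltype = LengthType.S; length = x }

let Z zone length nl fr dt name dft comments =
    { zone = zone; length = length; nl = nl; fr = fr
      datatype = dt; name = name; defaultvalue = dft; comments = comments }

let messageName =
    Z "200" (N 6) "Naam van het bericht" "Nom du message" Int "MessageName" "920000" "920000|920900|..."

printfn "%s is %d positions wide" messageName.name messageName.length.length

// Lines like these would be rejected by the compiler:
//   Z "200" (X 6) ...            X is not a known length constructor
//   Z "200" (N 6) ... Integer    Integer is not a Datatypes case
```

Misspelling a length constructor or a datatype in a Z call makes the script fail to compile, which is the safety net described above.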

Recap of the definition of the zone so far:

type Datatypes = Bool | CRC | Byte | Short | Int | DateTime | Time | String | Money | AmbHos | Gender | Error

type LengthType = A | N | S

type Length = { ltype: LengthType; length: int }

type Zone = { zone: string; length: Length; nl: string; fr: string; datatype: Datatypes; name: string; defaultvalue: string; comments: string}

let N x = { ltype= N; length= x }

let A x = { ltype= A; length= x }

let S x = { ltype= S; length= x }

let Z zone length nl fr dt name dft comments =

    { zone=zone; length = length; nl= nl; fr = fr; datatype=dt; name=name; defaultvalue=dft; comments=comments }

These 9 lines of code allow us to create zones in a concise and clear way. Let’s add semantics to this. Some zones are of the same type, and have a specific meaning. For example I defined the Recordtype function as

let Recordtype (rectype: int) zone =

    let rt = string rectype

    Z zone (N 2) ("recordtype " + rt) ("enregistrement de type " + rt) Byte "Recordtype" rt ("Always " + rt)

Every eFact record has a specific record type, stored in the first 2 bytes of the record. These zones always have the same NL and FR description, so I made a new function for them. The function in itself is not meant to save typing, but to give semantics to this field.

Recordtype 95 "400"

indicates a zone that holds the record type. We can also write this out in the code as

Z "400" (N 2) "recordtype 95" "enregistrement de type 95" Byte "Recordtype" "95" "Always 95"

The written-out version is not much longer (copy / paste is your friend here), but the Recordtype call is a lot clearer about what it means. So in the same style I defined Mutuality:

let Mutuality zone =

    Z zone (N 3) "Nummer mutualiteit" "Numéro de Mutualité" Int "MutualityNumber" "" ""

And again

Mutuality "401"

indicates very clearly what we mean here. I made some more:

let Errorcode zone =

    let nzone = normalizeName zone

    Z zone (N 2) "Code fout" "Code érreur" Error ("Error" + nzone) "0" ""

let Reserved l zone =

    let nzone = normalizeName zone

    let dft =

        match l.ltype with

        | A -> sprintf "new string(' ', %d)" l.length

        | N -> sprintf "new string('0', %d)" l.length

        | S -> sprintf "'+' + new string('0', %d)" (l.length - 1)

    Z zone l "Reserve" "Reserve" String ("Reserved" + nzone) dft ""

As you can see, a reserved zone can only be of length types A | N | S. For each of the cases I defined the outcome. There is no more need to think about what kind of attributes need to be generated, and it is clear that this is a zone that is there as a filler, in case more zones are needed in the future (remember, this is an archaic format).

This now gives us a (domain specific) language to describe the records, for example:

Recordtype 95 "400"

Errorcode "4001"

Mutuality "401"

Errorcode "4011"

Z "402" (N 12) "Nummer van verzamelfactuur" "Numéro de facture récapitulative" String "RecapInvoiceNumber" "" ""

Errorcode "4021"

// ...

Reserved (N 257) "413"

Now we have a way to describe the zones in the flat file that will be converted into properties in a C# class. Let’s extend the DSL to include classes. In the eFact documentation there are some predefined structures called segments. A segment has a name and is composed of 1 or more zones. These segments will be put together in a class. So a class is a named collection of segments, and a segment is a named collection of zones. A class can also inherit from another class, which saves some more typing. A namespace is a named collection of classes, and finally a program (I didn’t find a better name for this) is composed of namespaces, and has a filename.

Here are the definitions:

type Segment = { name: string; zones: Zone list }

type Interface = { name: string; lines: string list }

type Record = { name: string; inherits: Record option; implements: Interface list; segments: Segment list }

type Namespace = { name: string; records: Record list }

type Program = { filename: string; baseNamespace: string; namespaces: Namespace list }

Let’s define a small program

let segment200 =

    {

        name= "segment200";

        zones=

            [

                Z "200" (N 6) "Naam van het bericht" "Nom du message" Int "MessageName" "920000" "920000|920900|..."

                Errorcode "2001"

                Z "201" (N 2) "Versienummer formaat van het bericht" "N° version du format du message" Byte "MessageVersionNumber" "" "2"

                Errorcode "2011"

                // ...

                Z "205" (N 14) "Referentie bericht VI" "Reference du message OA" String "ReferenceOA" "" ""

                Errorcode "2051"

                Reserved (N 15) "206"

            ]

    }

let segment300 =

    {

        name= "segment300";

        zones=

            [

                Z "300a" (N 4) "Factureringsjaar" "Année de facturation" Int "YearBilled" "" ""

                Z "300b" (N 2) "Factureringsmaand" "Mois de facturation" Byte "MonthBilled" "" ""

                Errorcode "3001"

                Z "301" (N 3) "Nummer van de verzendingen" "Numero d'envoi" Int "RequestNr" "" ""

                Errorcode "3011"

                Z "302" (N 8) "Datum opmaak factuur" "Date de création de facture" DateTime "Creationdate" "" ""

                Errorcode "3021"

                // ...

                Z "309" (N 2) "Type facturering" "Type facturation" Byte "Invoicingtype" "" ""

                Errorcode "3091"

            ]

    }

let fileInfoBase =

    {

        name= "FileInfoBase";

        inherits = None;

        implements = [];

        segments=

            [

                segment200

                segment300

            ]

    }

let fileInfo =

    {

        name= "FileInfo";

        inherits = Some fileInfoBase;

        implements = [];

        segments=

            [

                segment300a

            ]

    }

// ...

let namespaceRequests =

    {

        name="Requests";

        records=

            [

                fileInfoBase

                fileInfo

                // ...

            ]

    }

let namespaceSettlement =

    {

        name="Settlement";

        records=

            [

                // ...

            ]

    }

let prog =

    {

        filename="eFact.cs";

        baseNamespace="HdmpCloud.eHealth.eFact.Serializer.Recordformats.";

        namespaces =

            [

                namespaceRequests

                namespaceSettlement

            ]

    }

As you can see, the definition of all the needed datatypes is about 10 lines, and very readable:

type Datatypes = Bool | CRC | Byte | Short | Int | DateTime | Time | String | Money | AmbHos | Gender | Error

type LengthType = A | N | S

type Length = { ltype: LengthType; length: int }

type Zone = { zone: string; length: Length; nl: string; fr: string; datatype: Datatypes; name: string; defaultvalue: string; comments: string}

type Segment = { name: string; zones: Zone list }

type Interface = { name: string; lines: string list }

type Record = { name: string; inherits: Record option; implements: Interface list; segments: Segment list }

type Namespace = { name: string; records: Record list }

type Program = { filename: string; baseNamespace: string; namespaces: Namespace list }

Then we defined some helper functions to make the definition of the zones a bit easier, and to give it semantic meaning. And now we have described the zones, segments, records, namespaces and the program. This is done in about 1000 lines of code.

Let’s generate some C#

Nice. We have described our language (DSL), and we have described what our C# classes should look like. We can compile this program, and if it succeeds we know that the program in our DSL is syntactically correct. Time to generate the code, so this becomes useful.

To start, let’s output a Zone. This will be output as a property in a C# class. Don’t mind the pos parameter yet.

let outputZone pos zone =

    let (declaration, att3) = outputDeclaration zone

    let att1 = outputEFactMetadata zone pos

    let att2 = sprintf "FieldFixedLength(%d)" zone.length.length

    let attslist = [ att1; att2; att3 ]

    let atts = attslist |> List.reduce (fun a b -> a + ", " + b)

    let comment = if zone.comments.Length = 0 then "" else (C2 zone.comments)

    "[" + atts + "] " + declaration + (outputDefaultValue zone) + " " + comment

As you can see, there are some helper functions here. I’ll discuss them below.

The outputZone function takes 2 parameters, pos and zone, and returns a string describing a C# property with the necessary attributes. This is the central function in the code generation. In the end the generated program will just be a list of strings to be written into a file.

In F# a function can only be used if it was defined before the calling function. At first this is a pain, but it forces you to have a correct dependency structure. Typically this results in a list of small functions that are composed into more useful functions. Let’s look at some of the functions in “generator.fs”, which contains the code to generate the C# classes.

Very simple function to generate the string “5N” from the type (N 5):

let outputLength (l: Length) =

    sprintf "%d%A" l.length l.ltype

Make the first character of a string uppercase:

let captitalize (s:string) =

    if s.Length = 0 then ""

    else s.Substring(0,1).ToUpper() + s.Substring(1)

Create the EFactMetadata attribute:

// EFactMetadata("312", "449", "352-800", "Reserve", "Reserve")

let outputEFactMetadata zone pos =

    let nl = captitalize zone.nl

    let fr = captitalize zone.fr

    let rng = sprintf "%d-%d" pos (pos + zone.length.length - 1)

    sprintf "EFactMetadata(\"%s\", \"%s\", \"%s\", \"%s\", \"%s\")" zone.zone (outputLength zone.length) rng nl fr

The function is straightforward thanks to the use of the small helpers.
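As an illustration of how the position range ends up in the attribute, the following sketch builds the EFactMetadata text for zone 200 at position 1. MiniZone, lengthCode and metadataFor are hypothetical names used only here; the capitalization step is left out, and the length letter is produced with an explicit match rather than the %A format.

```fsharp
// Sketch only; RequireQualifiedAccess is an assumption of this example.
[<RequireQualifiedAccess>]
type LengthType = A | N | S

type Length = { ltype: LengthType; length: int }

// Only the fields the attribute needs; the article's Zone record has more.
type MiniZone = { zone: string; length: Length; nl: string; fr: string }

// "6N", "45A", ... : the number of positions followed by the length type.
let lengthCode (l: Length) =
    let letter =
        match l.ltype with
        | LengthType.A -> "A"
        | LengthType.N -> "N"
        | LengthType.S -> "S"
    sprintf "%d%s" l.length letter

// pos is the 1-based start position of the zone inside the record.
let metadataFor (pos: int) (z: MiniZone) =
    let lastPos = pos + z.length.length - 1
    sprintf "EFactMetadata(\"%s\", \"%s\", \"%d-%d\", \"%s\", \"%s\")"
        z.zone (lengthCode z.length) pos lastPos z.nl z.fr

let zone200 =
    { zone = "200"; length = { ltype = LengthType.N; length = 6 }
      nl = "Naam van het bericht"; fr = "Nom du message" }

printfn "%s" (metadataFor 1 zone200)
// EFactMetadata("200", "6N", "1-6", "Naam van het bericht", "Nom du message")
```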

// public string Reserve9 { get; set; } = new string(' ', 449);

let outputDeclaration zone =

    let (dt, att) =

        match zone.datatype with

        | CRC -> ("byte", "FieldTrim(TrimMode.Both)")

        | Int -> ("int", if (zone.length.ltype = LengthType.S)

                         then sprintf "FieldConverter(typeof(SignedIntConverter), %d)" zone.length.length

                         else "FieldAlign(AlignMode.Right, '0')")

        // ...

        | Gender -> ("Gender", "FieldConverter(typeof(EnumIntConverter),1)")

    (sprintf "public %s %s { get; set; }" dt zone.name, att)

// [EFactMetadata("312", "449", "352-800", "Reserve", "Reserve"), FieldFixedLength(450), FieldValueDiscarded] public string Reserve9 { get; set; } = new string(' ', 449);

let outputZone pos zone =

    let (declaration, att3) = outputDeclaration zone

    let att1 = outputEFactMetadata zone pos

    let att2 = sprintf "FieldFixedLength(%d)" zone.length.length

    let attslist = [ att1; att2; att3 ]

    let atts = attslist |> List.reduce (fun a b -> a + ", " + b)

    let comment = if zone.comments.Length = 0 then "" else (C2 zone.comments)

    "[" + atts + "] " + declaration + (outputDefaultValue zone) + " " + comment

The first function with some logic in it: outputSegment

We want to output a segment, which is a number of zones. There will be a loop to cover all the zones, but in functional programming we avoid loops as much as possible. F# provides us with a lot of functions to handle collections.

The output we want is not just a line for each zone; given that eFact files are records with fixed-length fields, we also want to indicate the position of the field in the record. We saw before that each zone has a length, which allows us to calculate the positions. Here is some partial output of a segment:

// Segment segment200

[EFactMetadata("200", "6N", "1-6", "Naam van het bericht", "Nom du message"), FieldFixedLength(6), FieldAlign(AlignMode.Right, '0')] public int MessageName { get; set; } = 920000; // 920000|920900|...

[EFactMetadata("2001", "2N", "7-8", "Code fout", "Code érreur"), FieldFixedLength(2), FieldAlign(AlignMode.Right, '0')] public byte Error2001 { get; set; } = 0;

[EFactMetadata("201", "2N", "9-10", "Versienummer formaat van het bericht", "N° version du format du message"), FieldFixedLength(2), FieldAlign(AlignMode.Right, '0')] public byte MessageVersionNumber { get; set; } // 2

[EFactMetadata("2011", "2N", "11-12", "Code fout", "Code érreur"), FieldFixedLength(2), FieldAlign(AlignMode.Right, '0')] public byte Error2011 { get; set; } = 0;

Notice the 3rd parameter of the EFactMetadata attribute (“1-6”, “7-8”, “9-10”, “11-12”, …). This is a running total that is calculated using a start position and the lengths of the zones. This explains why the outputZone function takes a “pos” parameter. Here is the function:

let outputSegment start (seg: Segment) =

    let (endpos, lines) =

        seg.zones |> List.fold (fun (pos, lines) z ->

            let z2 = outputZone pos z

            (pos + z.length.length, z2::lines)

        ) (start, [])

    let zs2 = (C2 ("Segment " + seg.name)) :: (lines |> List.rev)

    (endpos, zs2)

A record is composed of one or multiple segments, so we need a start position and we return the end position for this segment. Later the outputRecord function will use the same trick as we use here for the position in the EFactMetadata attribute.

The main loop is implemented in the List.fold function:

seg.zones |> List.fold (fun (pos, lines) z ->

    let z2 = outputZone pos z

    (pos + z.length.length, z2::lines)

) (start, [])

Taking the collection of zones as its input, List.fold will iterate over each zone and apply an accumulator function to it. The accumulator is the tuple (pos, lines), which indicates that we are accumulating 2 things at the same time: the position and the generated lines.

let z2 = outputZone pos z // generates the line for the current position

(pos + z.length.length, z2::lines) // returns pos plus the length of the zone and the generated line in front of all the lines that were already generated

The result is that we now have our lines with the position correctly filled, but in reverse order. This explains the following line:

let zs2 = (C2 ("Segment " + seg.name)) :: (lines |> List.rev)
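The running total can be tried out on its own. The sketch below keeps only the zone names and lengths from segment200 and folds over them the same way outputSegment does; rangesFrom and step are made-up names for this example. It also shows List.mapFold, a standard library function that threads the state while keeping the results in order, as a possible alternative to fold plus List.rev.

```fsharp
// (zone name, length) pairs taken from the segment200 output shown earlier.
let zones = [ ("200", 6); ("2001", 2); ("201", 2); ("2011", 2) ]

// Same shape as outputSegment: the state is (next position, lines so far).
let rangesFrom start zoneList =
    let step (pos, acc) (name, len) =
        let line = sprintf "%s: %d-%d" name pos (pos + len - 1)
        // Prepending is cheap, but leaves the lines in reverse order.
        (pos + len, line :: acc)
    let (endpos, lines) = List.fold step (start, []) zoneList
    (endpos, List.rev lines)

let (next, lines) = rangesFrom 1 zones
lines |> List.iter (printfn "%s")
// 200: 1-6
// 2001: 7-8
// 201: 9-10
// 2011: 11-12
// next is 13, the start position for whatever segment follows.

// List.mapFold returns (results in order, final state), so no List.rev.
let (lines2, next2) =
    List.mapFold
        (fun pos (name, len) -> (sprintf "%s: %d-%d" name pos (pos + len - 1), pos + len))
        1
        zones
```

Returning the end position next to the lines is what makes it possible to chain segments: the value 13 here would be passed as the start position of the next segment.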

If you like you can read the rest of the code on GitHub. Most of the code is straightforward from this point on.

More enhancements

One simple enhancement is this:

let C2 s = "// " + s

Now we can generate comments like C2 "Segment 200".

Errorcodes

In the efact format there are many Errorcode fields. They always look the same:

Z "2001" (N 2) "Code fout" "Code érreur" Error "Error2001" "0" ""

This is always a 2-digit field (N 2), so we can define a new function for this:

let Errorcode zone =

    let nzone = normalizeName zone

    Z zone (N 2) "Code fout" "Code érreur" Error ("Error" + nzone) "0" ""

Errorcode "2001" will now create a 2-digit zone in a descriptive way.

Reserved zones

There are also reserved zones, which come in numeric and alphabetic flavors (the code below also covers signed numeric). Depending on their type they will be filled up with different values. They are the FILLERS in good old COBOL (and yes, this says something about my age).

To describe them we make another function:

let Reserved l zone =

    let nzone = normalizeName zone

    let dft =

        match l.ltype with

        | A -> sprintf "new string(' ', %d)" l.length

        | N -> sprintf "new string('0', %d)" l.length

        | S -> sprintf "'+' + new string('0', %d)" (l.length - 1)

    Z zone l "Reserve" "Reserve" String ("Reserved" + nzone) dft ""
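To make the three fillers concrete, this sketch isolates the default-value part of Reserved in a hypothetical reservedDefault function (LengthType is marked RequireQualifiedAccess here, which is an assumption of the example and not taken from the project). The strings it returns are C# expressions that end up after the = sign of the generated property.

```fsharp
// Sketch only; RequireQualifiedAccess is an assumption of this example.
[<RequireQualifiedAccess>]
type LengthType = A | N | S

type Length = { ltype: LengthType; length: int }

// Hypothetical helper: the default-value logic of Reserved on its own.
let reservedDefault (l: Length) =
    match l.ltype with
    | LengthType.A -> sprintf "new string(' ', %d)" l.length
    | LengthType.N -> sprintf "new string('0', %d)" l.length
    | LengthType.S -> sprintf "'+' + new string('0', %d)" (l.length - 1)

let samples =
    [ { ltype = LengthType.A; length = 5 }
      { ltype = LengthType.N; length = 5 }
      { ltype = LengthType.S; length = 5 } ]

samples |> List.iter (fun l -> printfn "%s" (reservedDefault l))
// new string(' ', 5)
// new string('0', 5)
// '+' + new string('0', 4)
```

For a signed zone the '+' takes one position, so only length - 1 zeros follow and the total width stays equal to the zone length.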

Conclusion

Describing the data model for the classes to be generated takes about 15 lines of code. Then we defined a couple of small helper functions and some bigger functions to generate the code. The generator.fs file contains 163 lines of code. With this we can describe our program in a readable way. We also added some semantics to the code with constructor functions to describe fillers, errors, a mutuality, … I think this is a nice demonstration of F# as a functional language.

If you don’t have an Azure account yet, there are some ways to get a free test account. You can surf to

If you don’t have an Azure account yet, there are some ways to get a free test account. You can surf to  Sysprep can be used with parameters (when you know what you are doing), or just without parameters, which will pop up a little form. In the screenshot, you can see the form with the right values filled in:

Sysprep can be used with parameters (when you know what you are doing), or just without parameters, which will pop up a little form. In the screenshot, you can see the form with the right values filled in:

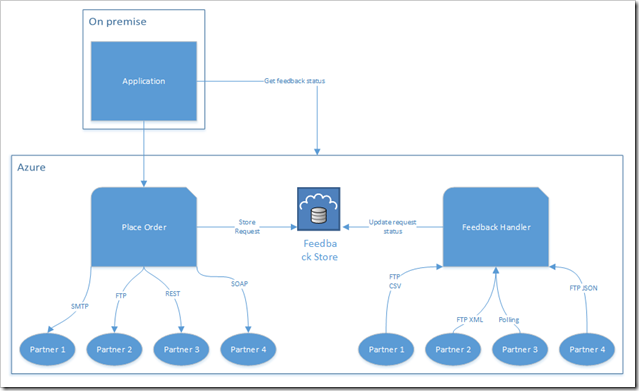

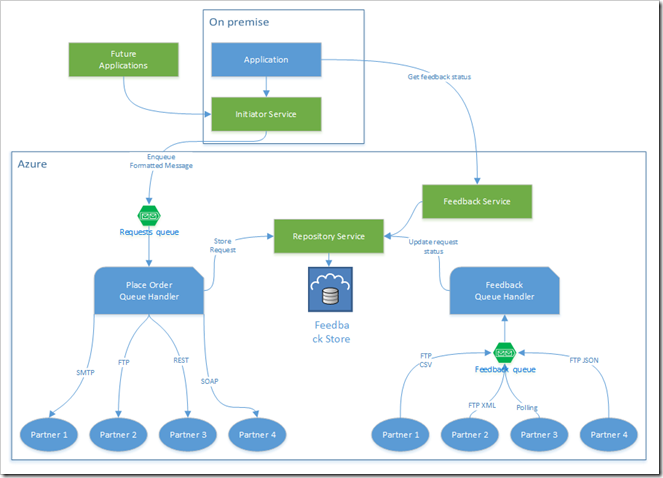

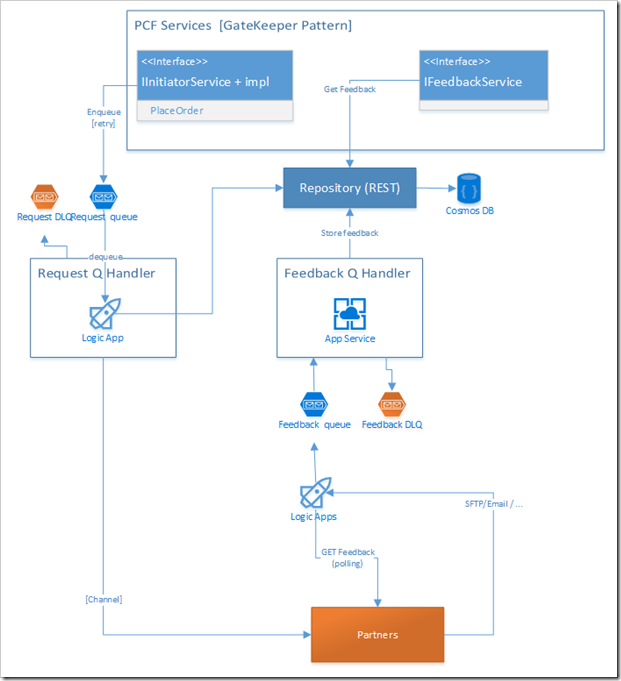

I started working on a C# project that will communicate requests to several different partners, and receive feedback from them. Each partner will receive requests in their own way. This means that sending requests can (currently) be done by

I started working on a C# project that will communicate requests to several different partners, and receive feedback from them. Each partner will receive requests in their own way. This means that sending requests can (currently) be done by